Glittr stats

In this report you can find some general statistics about Glittr.org. The plots and statistics are used, among other places, in the manuscript. Since Glittr.org is an ongoing project, these statistics are updated weekly.

Set up the environment

This is only required if you run this notebook locally. It loads the required packages.

To run locally, create a file named .env and add your GitHub PAT (variable named PAT) and Google API key (variable named GOOGLE_API_KEY) to it, e.g.:

# this is an example, store it as .env:

export PAT="ghp_aRSRESCTZII20Lklser3H"

export GOOGLE_API_KEY="AjKSLE5SklxuRsxwPP8s0"

Now source this file in your UNIX terminal to export the keys as environment variables:

source .env

In R, get environment variables as objects:

pat <- Sys.getenv("PAT")

google_api_key <- Sys.getenv("GOOGLE_API_KEY")

matomo_api_key <- Sys.getenv("MATOMO_API_KEY")

Setting colors. These correspond to the category colours on glittr.org.

glittr_cols <- c(

"Scripting and languages" = "#3a86ff",

"Computational methods and pipelines" = "#fb5607",

"Omics analysis" = "#ff006e",

"Reproducibility and data management" = "#ffbe0b",

"Statistics and machine learning" = "#8338ec",

"Others" = "#000000")

Parse repository data

We use the glittr.org REST API to get repository metadata, including stargazers, recency, category, license and tags.

response <- request("https://glittr.org/api/repositories") |>

req_perform() |>

resp_body_json()

# extract relevant items as dataframe

repo_info_list <- lapply(response$data, function(x) data.frame(

repo = x$name,

author_name = x$author$name,

stargazers = x$stargazers,

recency = x$days_since_last_push,

url = x$url,

license = ifelse(is.null(x$license), "none", x$license),

main_tag = x$tags[[1]]$name,

main_category = x$tags[[1]]$category,

website = x$website,

author_profile = x$author$profile,

author_website = x$author$website

))

repo_info <- do.call(rbind, repo_info_list)

# create a column with provider (either github or gitlab)

repo_info$provider <- ifelse(grepl("github", repo_info$url), "github", "gitlab")

# create a factor for categories for sorting

repo_info$main_category <- factor(repo_info$main_category,

levels = names(glittr_cols))

# category table to keep order the same in the plots

cat_table <- table(category = repo_info$main_category)

cat_table <- sort(cat_table)

Number of repositories: 746
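Optional fields such as the website can be missing from an API record. A NULL passed to data.frame() silently drops that column, after which rbind() fails because the rows no longer have the same columns. The following is a minimal sketch of a NULL-safe flattening step, run on made-up records that only mimic the shape of response$data; `null_to_na` is a hypothetical helper and not part of the report's code.

```r
# Hypothetical helper (not part of the original report): turn NULL into NA
# so that every record yields the same set of columns.
null_to_na <- function(x) if (is.null(x)) NA_character_ else x

# Mock records that mimic the shape of response$data; all values are made up.
mock_data <- list(
  list(name = "org-a/repo-1", url = "https://github.com/org-a/repo-1",
       license = "mit", website = "https://example.org"),
  list(name = "org-b/repo-2", url = "https://gitlab.com/org-b/repo-2",
       license = NULL, website = NULL)
)

# One single-row data frame per record, then bind them together
rows <- lapply(mock_data, function(x) data.frame(
  repo = x$name,
  url = x$url,
  license = if (is.null(x$license)) "none" else x$license,
  website = null_to_na(x$website)
))
mock_info <- do.call(rbind, rows)

# The provider is derived from the URL, as in the report
mock_info$provider <- ifelse(grepl("github", mock_info$url), "github", "gitlab")
print(mock_info)
```

Whether the glittr.org API ever omits these fields is an assumption here; the helper only guards against that case.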

Get contributors info

We use the GitHub REST API to get the number of contributors for each repository on glittr.org. This takes a few minutes, so a cached version is used if the set of repositories hasn’t changed.
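The count relies on pagination: with one contributor per page (per_page = 1), the page number in the rel="last" link equals the number of contributors, and a response without such a link has a single page. Below is a minimal offline sketch of reading that page number from a link URL. `page_from_url` is a hypothetical helper, and the example URL is made up.

```r
# Hypothetical helper: extract the `page` query parameter from a URL,
# wherever it appears in the query string.
page_from_url <- function(link_url) {
  if (is.null(link_url)) return(1)  # no "last" link: a single page
  # match "?page=" or "&page=" but not "per_page="
  m <- regmatches(link_url, regexpr("[?&]page=[0-9]+", link_url))
  if (length(m) == 0) return(NA_real_)
  as.numeric(sub("^[?&]page=", "", m))
}

# Made-up URL in the general form of a rel="last" pagination link
example_url <- "https://api.github.com/repositories/12345/contributors?per_page=1&page=42"
print(page_from_url(example_url))
print(page_from_url(NULL))
```

Matching on the parameter name rather than splitting on "&page=" also works when page is the first query parameter.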

# takes a long time to run, so use cached results if no repos have been

# added in the meantime

# check if data/n_contributors.rds exists

if(file.exists("data/n_contributors.rds")) {

n_contributors <- readRDS("data/n_contributors.rds")

} else {

n_contributors <- NULL

}

# get contributors info only from github repos

repo_info_gh <- repo_info[repo_info$provider == "github", ]

# get contributor info from github api if update is needed

if(!identical(sort(repo_info_gh$repo), sort(names(n_contributors)))) {

dir.create("data", showWarnings = FALSE)

n_contributors <- sapply(repo_info_gh$repo, function(x) {

# get repo contributors

resp <- request("https://api.github.com/repos/") |>

req_url_path_append(x) |>

req_url_path_append("contributors") |>

req_url_query(per_page = 1) |>

req_headers(

Accept = "application/vnd.github+json",

Authorization = paste("Bearer", pat),

`X-GitHub-Api-Version` = "2022-11-28",

) |>

req_perform()

link_url <- resp_link_url(resp, "last")

if(is.null(link_url)) {

return(1)

} else {

npages <- strsplit(link_url, "&page=")[[1]][2] |> as.numeric()

return(npages)

}

})

# overwrite rds file

saveRDS(n_contributors, "data/n_contributors.rds")

}

repo_info_gh$contributors <- n_contributors[repo_info_gh$repo]

Get country information

Here we get country information for all authors and organizations. The ‘location’ field of a GitHub profile is free text and can contain anything, so we use the Google geocoding API (via ggmap) to translate it into a country.

# check whether author info exists for caching

if(file.exists("data/author_info.rds")) {

author_info <- readRDS("data/author_info.rds")

author_info_authors <- unique(author_info$author) |> sort()

} else {

author_info_authors <- NULL

}

gh_authors <- repo_info$author_name[repo_info$provider == "github"] |>

unique() |>

sort()

# if the author info is out of date, update it

if(!identical(gh_authors, author_info_authors)) {

author_info_list <- list()

for(author in gh_authors) {

parsed <- request("https://api.github.com/users/") |>

req_url_path_append(author) |>

req_headers(

Accept = "application/vnd.github+json",

Authorization = paste("Bearer", pat),

`X-GitHub-Api-Version` = "2022-11-28",

) |>

req_perform() |>

resp_body_json()

author_info_list[[author]] <- data.frame(

author = parsed$login,

type = parsed$type,

name = ifelse(is.null(parsed$name), NA, parsed$name),

location = ifelse(is.null(parsed$location), NA, parsed$location)

)

}

author_info <- do.call(rbind, author_info_list)

author_info_loc <- author_info[!is.na(author_info$location), ]

author_loc <- author_info_loc$location

names(author_loc) <- author_info_loc$author

ggmap::register_google(key = google_api_key)

loc_info <- ggmap::geocode(author_loc,

output = 'all')

get_country <- function(loc_results) {

if("results" %in% names(loc_results)) {

for(results in loc_results$results) {

address_info <- results$address_components |>

lapply(unlist) |>

do.call(rbind, args = _) |>

as.data.frame()

country <- address_info$long_name[address_info$types1 == "country"]

# stop at the first result that contains a country component

if (length(country) > 0) return(country[1])

}

# no result contained a country component

return(NA)

} else {

return(NA)

}

}

countries <- sapply(loc_info, get_country)

names(countries) <- names(author_loc)

author_info$country <- countries[author_info$author]

saveRDS(author_info, "data/author_info.rds")

}

repo_info <- merge(repo_info, author_info, by.x = "author_name",

by.y = "author")

repo_info$country[is.na(repo_info$country)] <- "undefined"

- Number of authors: 347

- Number of countries: 29
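The country lookup can be tried offline on a mock geocoding result. This is a sketch: the nested list below only imitates the results / address_components / types layout that the code above reads, and `first_country` is a hypothetical, simplified stand-in for get_country.

```r
# Hypothetical re-implementation for illustration: return the first country
# found in a geocoding result, or NA if there is none.
first_country <- function(loc_results) {
  for (res in loc_results$results) {
    for (comp in res$address_components) {
      if ("country" %in% unlist(comp$types)) return(comp$long_name)
    }
  }
  NA_character_
}

# Mock result with a country component (values are made up)
mock_hit <- list(
  results = list(list(
    address_components = list(
      list(long_name = "Bern", short_name = "Bern",
           types = list("locality", "political")),
      list(long_name = "Switzerland", short_name = "CH",
           types = list("country", "political"))
    )
  )),
  status = "OK"
)
# Mock result for a location string that could not be geocoded
mock_miss <- list(results = list(), status = "ZERO_RESULTS")

countries <- sapply(list(a = mock_hit, b = mock_miss), first_country)
print(countries)
```

Returning NA for an empty result list keeps the later step that relabels missing countries as "undefined" working without special cases.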

Parse tag data

Here, we create tag_df that contains information for each tag by using the glittr.org API.

parsed <- request("https://glittr.org/api/tags") |>

req_perform() |>

resp_body_json()

tag_dfs <- list()

for(i in seq_along(parsed)) {

category <- parsed[[i]]$category

name <- sapply(parsed[[i]]$tags, function(x) x$name)

repositories <- sapply(parsed[[i]]$tags, function(x) x$repositories)

tag_dfs[[category]] <- data.frame(name, category, repositories)

}

tag_df <- do.call(rbind, tag_dfs) |> arrange(repositories)

Number of tags/topics: 66

Parse outlink data

Here, we use the Matomo API to retrieve outlinks to repositories. This gives us an idea of which repositories are popular on glittr.org and how often people click on a link. The data is summarized over the past year (the 365 days up to today).

url <- "https://matomo.sib.swiss/?module=API"

oneyearago <- format(Sys.Date() - 365, "%Y-%m-%d")

# Create and send the request

response <- request(url) |>

req_body_form(

method = "Actions.getOutlinks",

idSite = 217,

format = "json",

date = paste(oneyearago, "today", sep = ","),

period = "range",

expanded = 1,

filter_limit = -1,

token_auth = matomo_api_key

) |>

req_perform() |>

resp_body_json()

## Get outlinks metadata in a dataframe

outlinks_list <- list()

for(domain in response) {

label <- domain$label

url_info <- lapply(domain$subtable, function(x) {

data.frame(

url = ifelse(is.null(x$url),NA , x$url),

nb_visits = ifelse(is.null(x$nb_visits),NA , x$nb_visits),

domain = label

)

})

outlinks_list[[domain$label]] <- do.call(rbind, url_info)

}

outlinks_df <- do.call(rbind, outlinks_list)

row.names(outlinks_df) <- NULL

# function to clean urls for matching

clean_url <- function(url) {

trimws(url) |> gsub("/$", "", x = _) |> tolower()

}

# function to match outlink data with repo and website url per entry

match_url <- function(outlinks_df, repo_info, column = "url") {

url_clean <- clean_url(outlinks_df$url)

column_clean <- clean_url(repo_info[[column]])

outlinks_df[[paste0("is_", column)]] <- url_clean %in% column_clean

outlinks_df[[paste0("ass_repo_", column)]] <- repo_info$repo[match(url_clean,

column_clean)]

return(outlinks_df)

}

# apply the match url function on urls associated with repo entry

for(column in c("url", "website", "author_profile", "author_website")) {

outlinks_df <- match_url(outlinks_df, repo_info, column = column)

}

# filter for only repo url and website (ignore author info)

# check whether associations match

outlinks_df$associated_entry <- outlinks_df |>

select(ass_repo_url, ass_repo_website) |>

apply(1, function(x) {

x <- x[!is.na(x)] |> unique()

if(length(x) == 1) return(x[1])

if(length(x) == 0) return(NA)

if(length(x) == 2) return("do not correspond")

})

# create a list of outlink visits by entry

visits_by_entry <- outlinks_df |>

select(url, nb_visits, associated_entry) |>

filter(!is.na(associated_entry)) |>

group_by(associated_entry) |>

summarise(total_visits = sum(nb_visits))

visits_by_entry <- merge(visits_by_entry, repo_info,

by.x = "associated_entry",

by.y = "repo") |>

arrange(desc(total_visits))

visits_by_namespace <- visits_by_entry |>

select(total_visits, author_name) |>

group_by(author_name) |>

summarise(total_visits = sum(total_visits)) |>

arrange(desc(total_visits))

# visits by tag

visits_by_main_tag <- visits_by_entry |>

select(total_visits, main_tag) |>

group_by(main_tag) |>

summarise(total_visits = sum(total_visits))

# add main category information for plotting

visits_by_main_tag <- merge(visits_by_main_tag, tag_df,

by.x = "main_tag",

by.y = "name") |>

mutate(visits_per_repo = total_visits/repositories) |>

arrange(desc(visits_per_repo))

- The number of outlink visits in the last year: 2261

- Number of repositories found using Glittr.org: 244

- Most popular repo was TheAlgorithms/Python with 227 outlinks.

- Most popular namespace was TheAlgorithms with 227 outlinks.
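The matching step depends on normalising URLs before comparing them. Below is a minimal base R sketch on made-up data: the repositories, URLs and visit counts are all hypothetical. It is simplified compared to the code above, since it falls back to the website match when the repository URL does not match and does not flag conflicting associations.

```r
# Normalise URLs before comparing: trim whitespace, drop a trailing slash,
# and lower-case.
clean_url <- function(url) tolower(sub("/$", "", trimws(url)))

# Mock data; URLs and counts are made up for illustration.
mock_repos <- data.frame(
  repo = c("org-a/course", "org-b/book"),
  url = c("https://github.com/org-a/course", "https://github.com/org-b/book"),
  website = c("https://org-a.example.org/course/", NA)
)
mock_outlinks <- data.frame(
  url = c("https://github.com/Org-A/course/", "https://org-a.example.org/course",
          "https://github.com/org-b/book", "https://unrelated.example.com"),
  nb_visits = c(5, 3, 2, 9)
)

# Look up the entry via the repository URL first, then via the website
out_clean <- clean_url(mock_outlinks$url)
by_url <- mock_repos$repo[match(out_clean, clean_url(mock_repos$url))]
by_web <- mock_repos$repo[match(out_clean, clean_url(mock_repos$website))]
mock_outlinks$entry <- ifelse(is.na(by_url), by_web, by_url)

# Sum visits per entry; outlinks without an associated entry are dropped
matched <- mock_outlinks[!is.na(mock_outlinks$entry), ]
visits <- aggregate(nb_visits ~ entry, data = matched, FUN = sum)
print(visits)
```

Without the normalisation, the first two outlinks would not match because of the differing case and trailing slashes.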

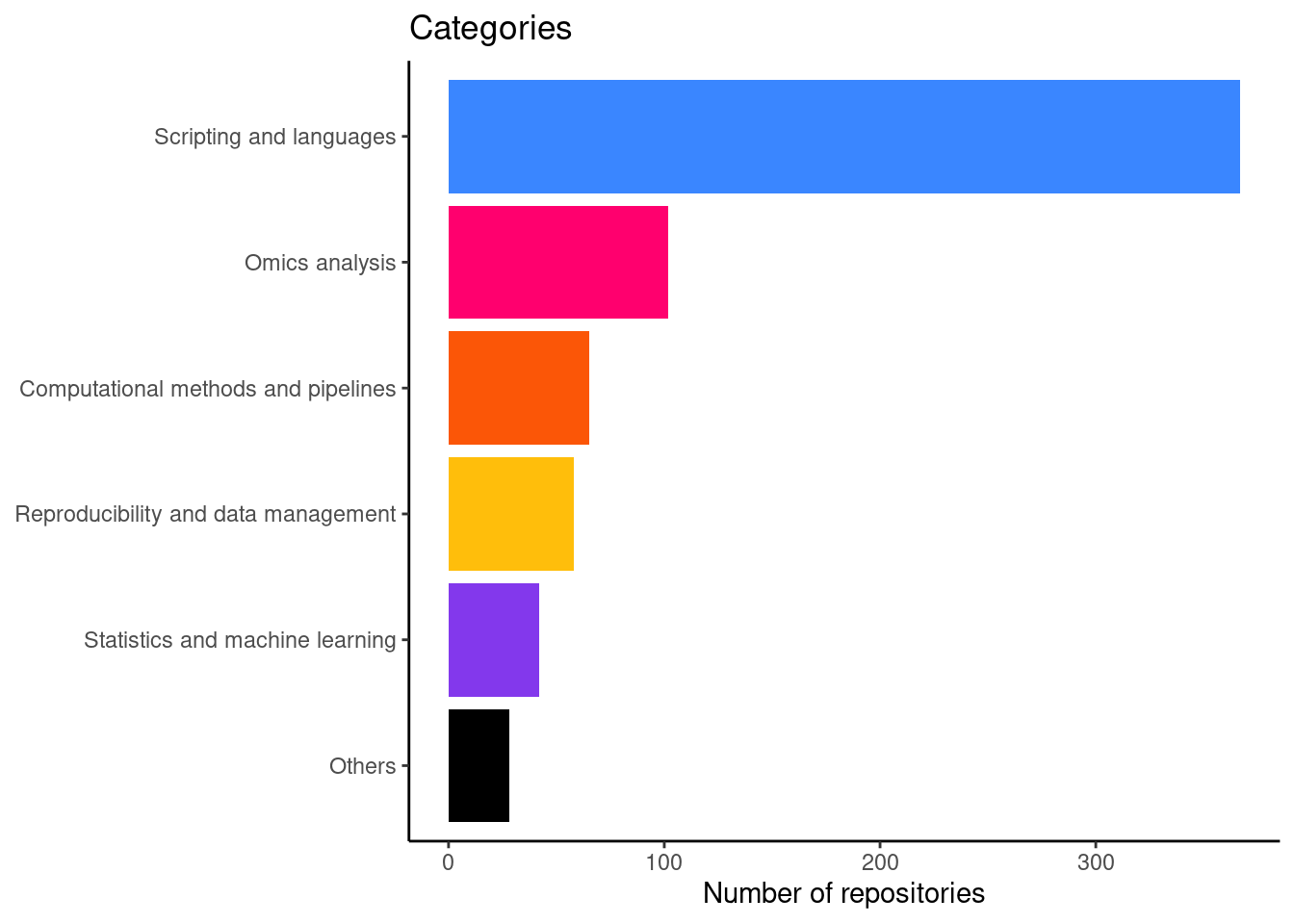

Number of repositories by category

This is figure 2A in the manuscript.

cat_count_plot <- table(category = repo_info$main_category) |>

as.data.frame() |>

ggplot(aes(x = reorder(category, Freq), y = Freq, fill = category)) +

geom_bar(stat = "identity") +

scale_fill_manual(values = glittr_cols) +

coord_flip() +

theme_classic() +

ggtitle("Categories") +

theme(legend.position = "none",

axis.title.y = element_blank()) +

ylab("Number of repositories")

print(cat_count_plot)

And a table with the actual numbers

category_count <- table(category = repo_info$main_category) |> as.data.frame()

knitr::kable(category_count)

| category | Freq |

|---|---|

| Scripting and languages | 331 |

| Computational methods and pipelines | 55 |

| Omics analysis | 175 |

| Reproducibility and data management | 51 |

| Statistics and machine learning | 102 |

| Others | 30 |

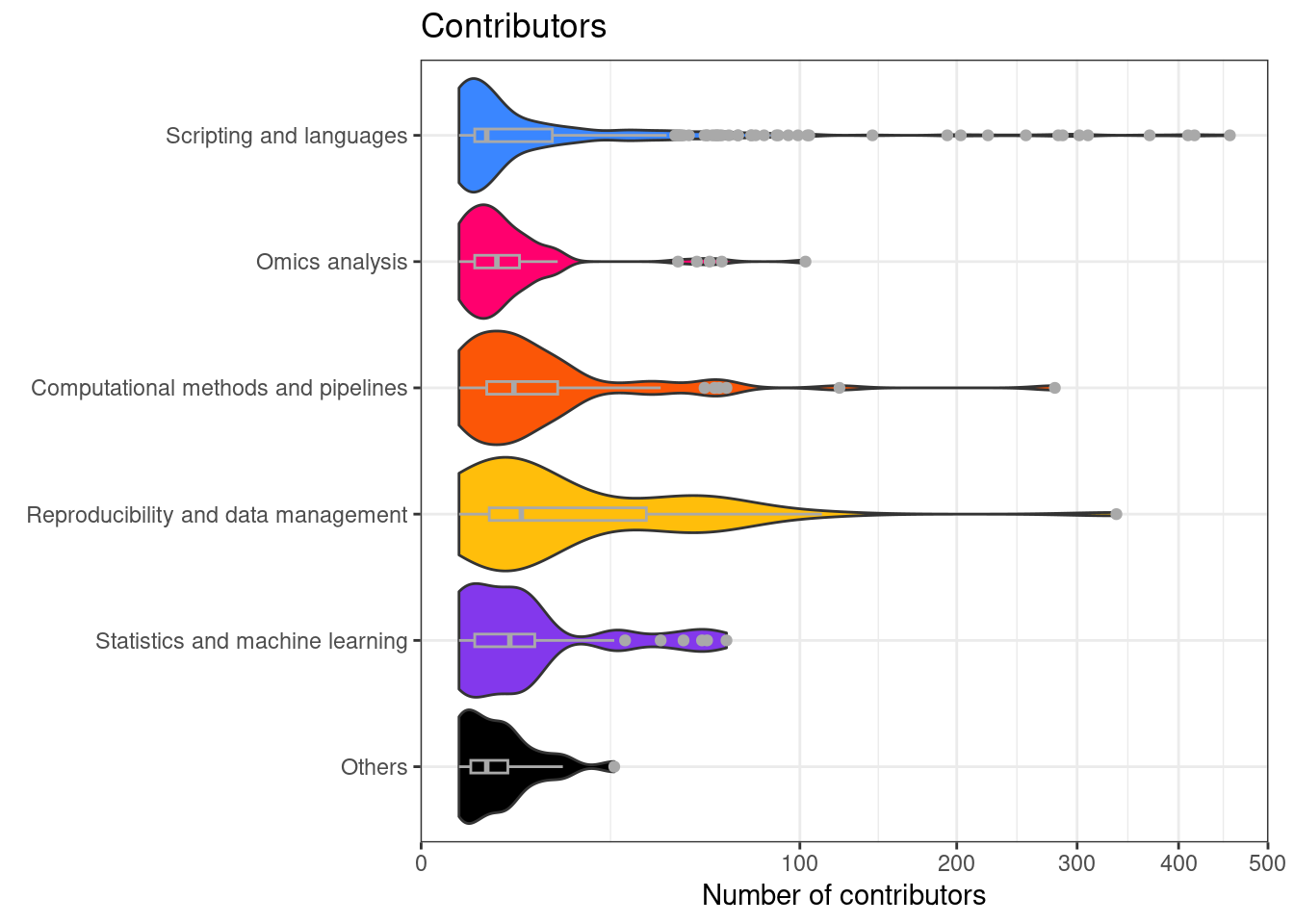

Number of contributors per repository separated by category

This is figure 2B in the manuscript.

repo_info_gh$main_category <- factor(repo_info_gh$main_category,

levels = names(cat_table))

contributors_plot <- repo_info_gh |>

ggplot(aes(x = main_category, y = contributors, fill = main_category)) +

geom_violin(scale = "width") +

geom_boxplot(width = 0.1, col = "darkgrey") +

coord_flip() +

ggtitle("Contributors") +

ylab("Number of contributors") +

scale_y_sqrt() +

scale_fill_manual(values = glittr_cols) +

theme_bw() +

theme(legend.position = "none",

axis.title.y = element_blank(),

plot.margin = margin(t = 5, r = 10, b = 5, l = 10))

print(contributors_plot)

And some statistics of contributors.

- More than 10 contributors: 25.1%

- More than 1 contributor: 79.4%

- Between 1 and 5 contributors: 60.2%
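The code behind these percentages is not shown in the report. One way to compute such shares is to take the mean of a logical condition, sketched here on made-up contributor counts; `pct` is a hypothetical helper, and the reading of "between 1 and 5" as more than 1 and at most 5 is an assumption.

```r
# Made-up contributor counts, for illustration only
contributors <- c(1, 1, 2, 3, 5, 8, 12, 40)

# Share (in percent) of repositories for which a condition holds
pct <- function(condition) round(mean(condition) * 100, 1)

more_than_10 <- pct(contributors > 10)
more_than_1 <- pct(contributors > 1)
# assumption: "between 1 and 5" means more than 1 and at most 5
between_1_and_5 <- pct(contributors > 1 & contributors <= 5)

print(c(more_than_10, more_than_1, between_1_and_5))
```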

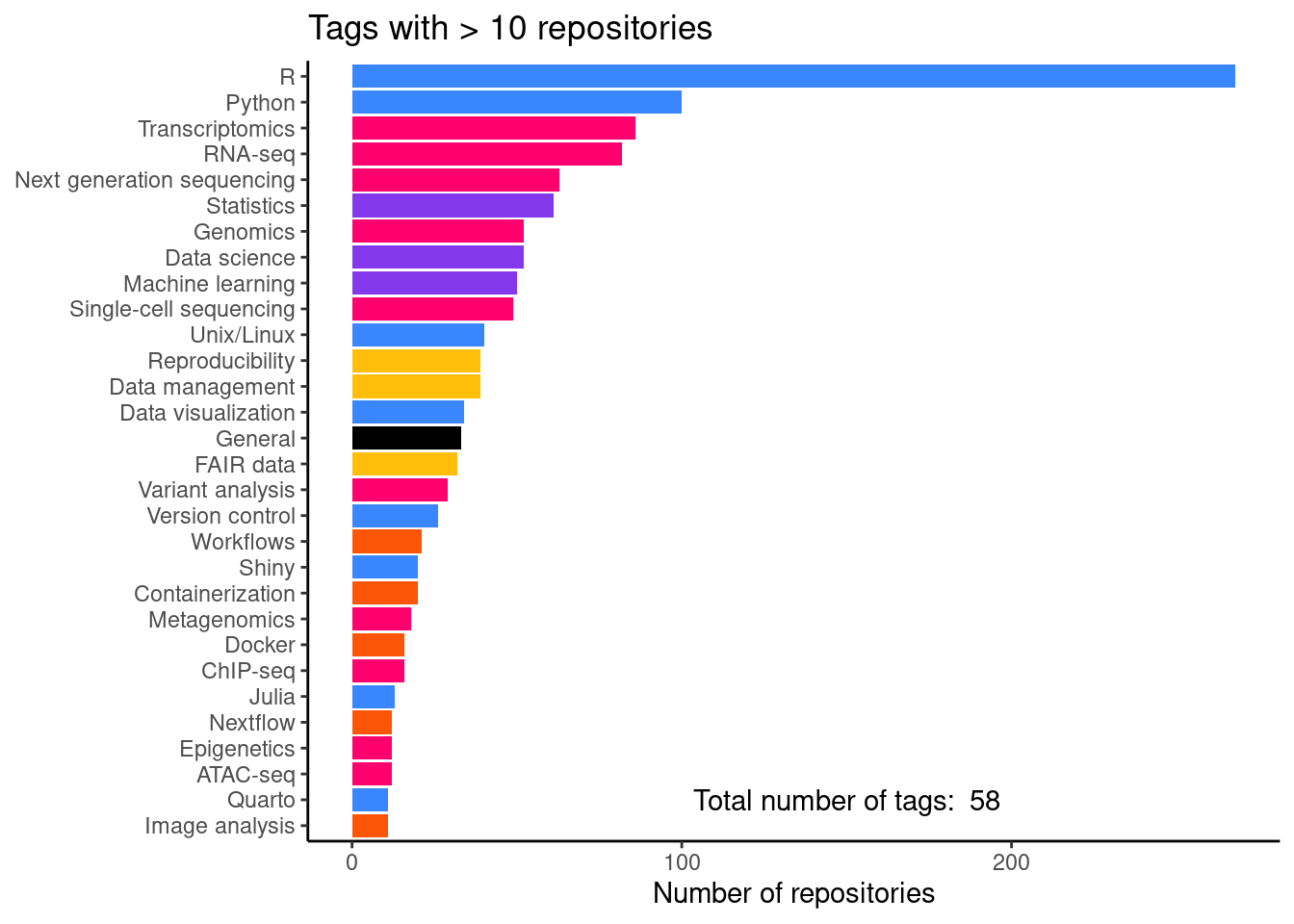

Number of repositories per tag

This is figure 2C in the manuscript.

tag_freq_plot <- tag_df |>

filter(repositories > 10) |>

ggplot(aes(x = reorder(name, repositories),

y = repositories, fill = category)) +

geom_bar(stat = "identity") +

coord_flip() +

scale_fill_manual(values = glittr_cols) +

ggtitle("Tags with > 10 repositories") +

ylab("Number of repositories") +

annotate(geom = "text", x = 2, y = 150,

label = paste("Total number of tags: ",

nrow(tag_df)),

color="black") +

theme_classic() +

theme(legend.position = "none",

axis.title.y = element_blank())

print(tag_freq_plot)

And a table with the actual numbers.

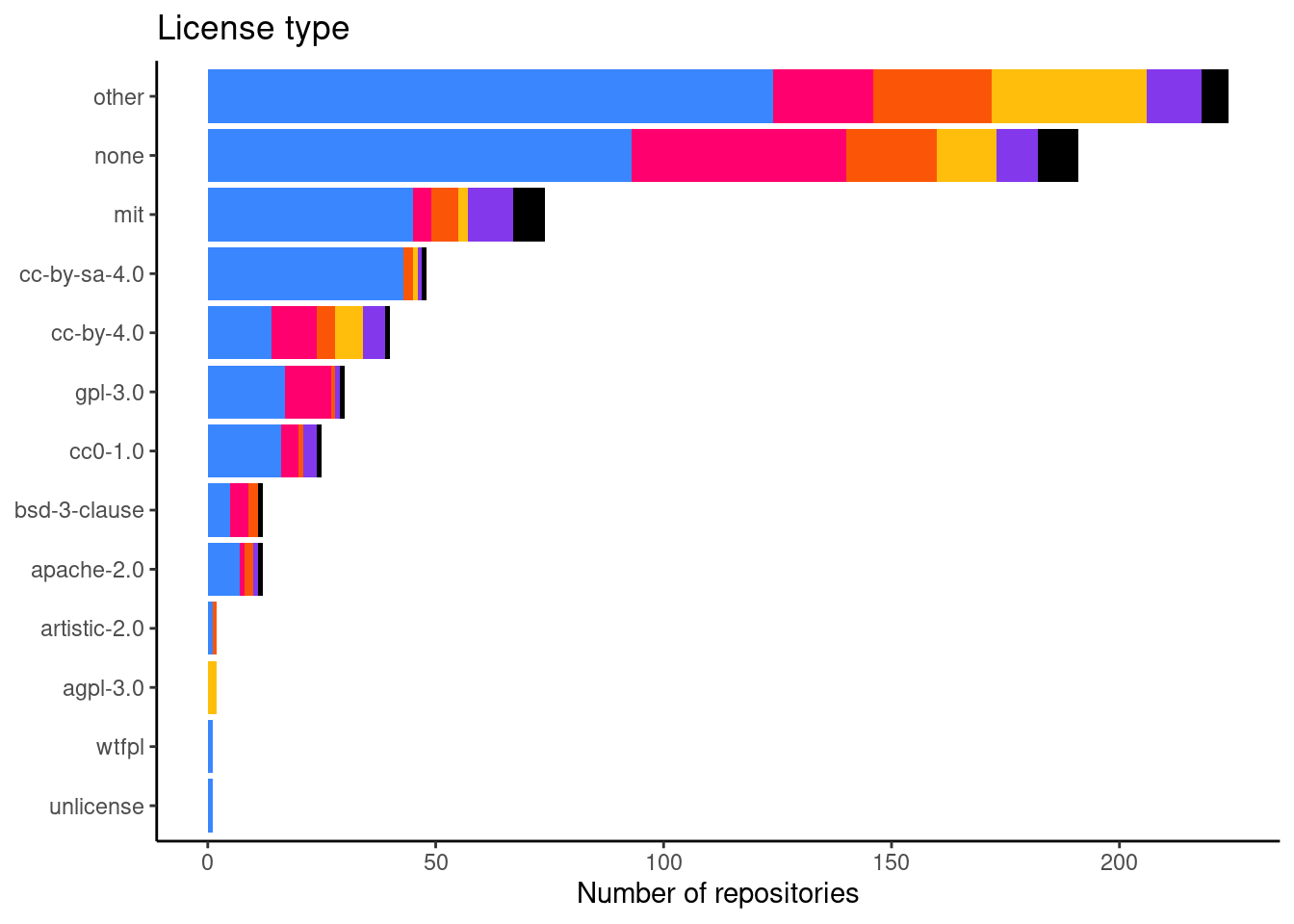

Number of repositories per license

This is figure 2E in the manuscript.

lic_freq_data <- table(license = repo_info$license,

main_category = repo_info$main_category) |>

as.data.frame()

lic_freq_data$main_category <- factor(lic_freq_data$main_category,

levels = names(cat_table))

lic_freq_plot <- lic_freq_data |>

ggplot(aes(x = reorder(license, Freq), y = Freq, fill = main_category)) +

geom_bar(stat = "identity") +

coord_flip() +

scale_fill_manual(values = glittr_cols) +

theme_classic() +

ggtitle("License type") +

ylab("Number of repositories") +

theme(legend.position = "none",

axis.title.y = element_blank())

print(lic_freq_plot)

And a table with the actual numbers.

| Var1 | Freq | perc |

|---|---|---|

| other | 246 | 33.1 |

| none | 210 | 28.2 |

| mit | 86 | 11.6 |

| cc-by-4.0 | 65 | 8.7 |

| cc-by-sa-4.0 | 49 | 6.6 |

| gpl-3.0 | 30 | 4.0 |

| cc0-1.0 | 26 | 3.5 |

| bsd-3-clause | 14 | 1.9 |

| apache-2.0 | 12 | 1.6 |

| agpl-3.0 | 2 | 0.3 |

| artistic-2.0 | 2 | 0.3 |

| unlicense | 1 | 0.1 |

| wtfpl | 1 | 0.1 |
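The code for this table is not shown. The perc column is consistent with each Freq divided by the column total (744) and rounded to one decimal, e.g. 246 / 744 gives 33.1. A minimal sketch of that calculation on made-up license labels, not the report's data:

```r
# Made-up license labels, for illustration only
license <- c("mit", "mit", "none", "other", "other", "other", "cc-by-4.0", "none")

# Count per license, then express each count as a percentage of the total
lic_tab <- as.data.frame(table(license), stringsAsFactors = FALSE)
lic_tab$perc <- round(lic_tab$Freq / sum(lic_tab$Freq) * 100, 1)

# Most frequent license first
lic_tab <- lic_tab[order(-lic_tab$Freq), ]
print(lic_tab)
```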

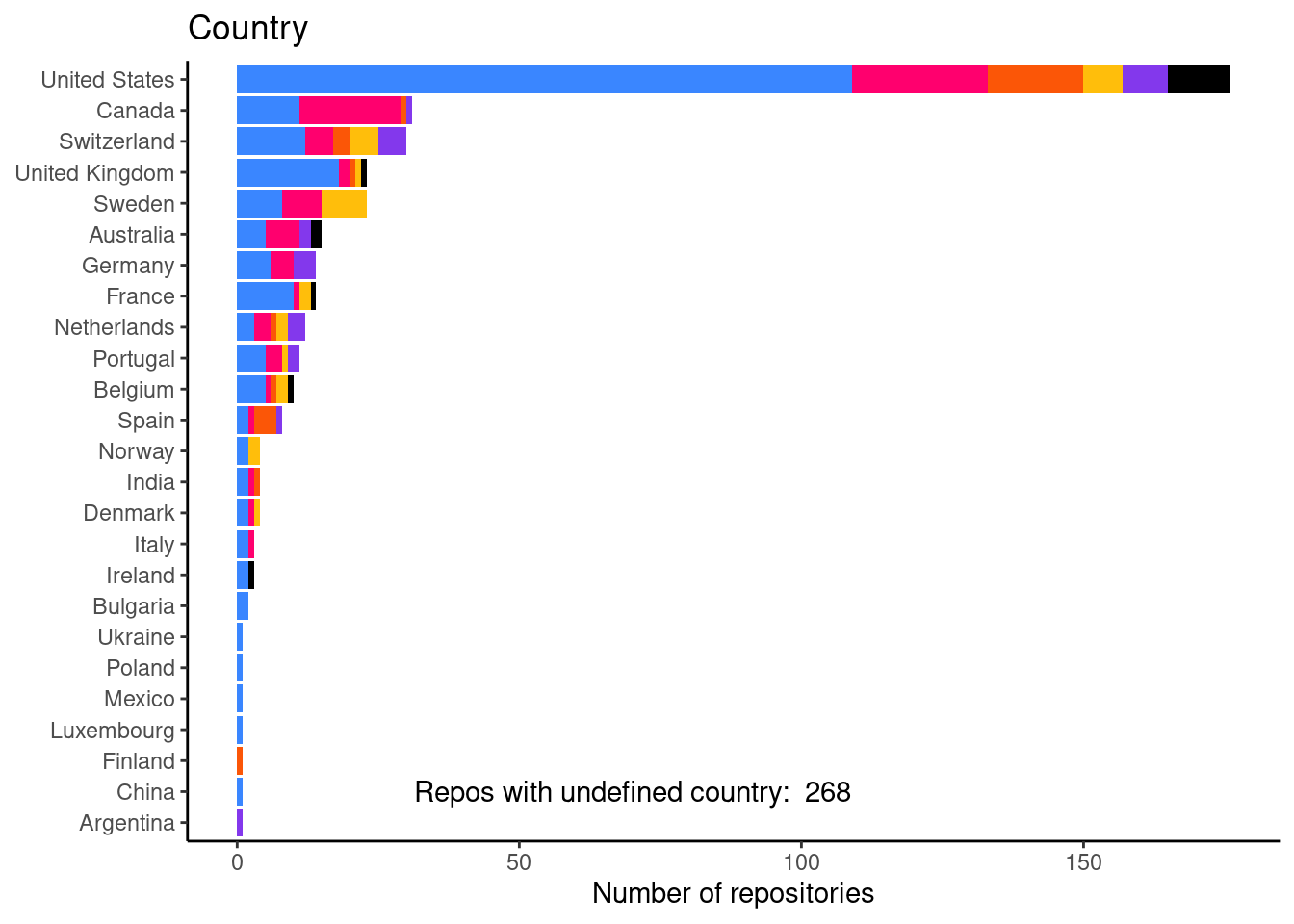

Number of repositories per country

This is figure 2F in the manuscript.

country_freq <- table(country = repo_info$country,

main_category = repo_info$main_category) |>

as.data.frame()

country_freq$main_category <- factor(country_freq$main_category,

levels = names(cat_table))

country_freq_plot <- country_freq |>

filter(country != "undefined") |>

ggplot(aes(x = reorder(country, Freq), y = Freq, fill = main_category)) +

geom_bar(stat = "identity") +

coord_flip() +

ggtitle("Country") +

ylab("Number of repositories") +

scale_fill_manual(values = glittr_cols) +

annotate(geom = "text", x = 2, y = 70,

label = paste("Repos with undefined country: ",

sum(repo_info$country == "undefined")),

color="black") +

theme_classic() +

theme(legend.position = "none",

axis.title.y = element_blank())

print(country_freq_plot)

And a table with the actual numbers.

repo_info$country |>

table() |>

as.data.frame() |>

arrange(desc(Freq)) |>

knitr::kable()

| Var1 | Freq |

|---|---|

| undefined | 298 |

| United States | 194 |

| Switzerland | 44 |

| Canada | 33 |

| United Kingdom | 28 |

| Sweden | 25 |

| Australia | 18 |

| Belgium | 15 |

| Germany | 14 |

| France | 13 |

| Netherlands | 12 |

| Portugal | 11 |

| Spain | 8 |

| Denmark | 5 |

| India | 4 |

| Norway | 4 |

| Italy | 3 |

| Bulgaria | 2 |

| Ireland | 2 |

| Luxembourg | 2 |

| Argentina | 1 |

| China | 1 |

| Czechia | 1 |

| Estonia | 1 |

| Finland | 1 |

| Mexico | 1 |

| Poland | 1 |

| South Africa | 1 |

| Ukraine | 1 |

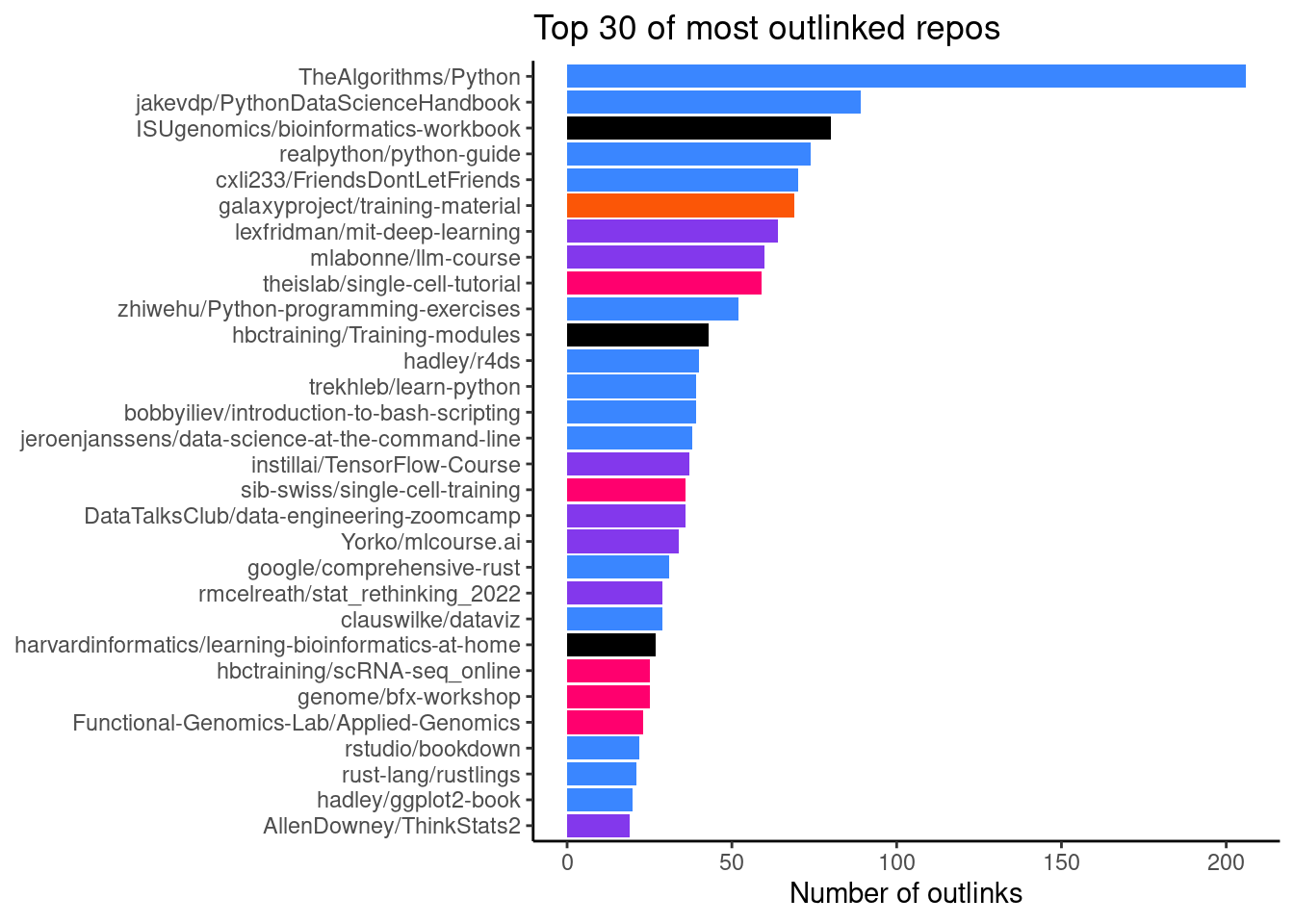

Number of outlinks by repo

Here, we use the site data from Matomo to investigate what visitors of Glittr.org have clicked on. This gives us an idea of which repositories and topics are popular among users. In Figure 7 we see that TheAlgorithms/Python was the most popular repository with 227 clicks.

visits_by_entry |>

slice(1:30) |>

ggplot(aes(x = reorder(associated_entry, total_visits),

y = total_visits, fill = main_category)) +

geom_bar(stat = "identity") +

coord_flip() +

scale_fill_manual(values = glittr_cols) +

ggtitle("Top 30 of most outlinked repos") +

ylab("Number of outlinks") +

theme_classic() +

theme(legend.position = "none",

axis.title.y = element_blank())

| repository | total_visits |

|---|---|

| TheAlgorithms/Python | 227 |

| mlabonne/llm-course | 84 |

| jakevdp/PythonDataScienceHandbook | 68 |

| galaxyproject/training-material | 66 |

| ISUgenomics/bioinformatics-workbook | 63 |

| gladstone-institutes/Bioinformatics-Workshops | 54 |

| theislab/single-cell-tutorial | 52 |

| bobbyiliev/introduction-to-bash-scripting | 43 |

| hbctraining/Intro-to-scRNAseq | 43 |

| cxli233/FriendsDontLetFriends | 42 |

| realpython/python-guide | 42 |

| pachterlab/BI-BE-CS-183-2023 | 41 |

| sib-swiss/single-cell-training | 37 |

| zhiwehu/Python-programming-exercises | 32 |

| hbctraining/Training-modules | 30 |

| DataTalksClub/data-engineering-zoomcamp | 29 |

| rust-lang/rustlings | 28 |

| griffithlab/rnabio.org | 27 |

| lexfridman/mit-deep-learning | 27 |

| trekhleb/learn-python | 27 |

| theislab/single-cell-best-practices | 26 |

| harvardinformatics/learning-bioinformatics-at-home | 25 |

| hadley/ggplot2-book | 22 |

| hadley/r4ds | 22 |

| hbctraining/Intro-to-bulk-RNAseq | 22 |

| telatin/microbiome-bioinformatics | 22 |

| Yorko/mlcourse.ai | 22 |

| biotrain-latam/BiotrAIn-pilot-course | 21 |

| NeuromatchAcademy/course-content | 21 |

| cmdcolin/awesome-genome-visualization | 20 |

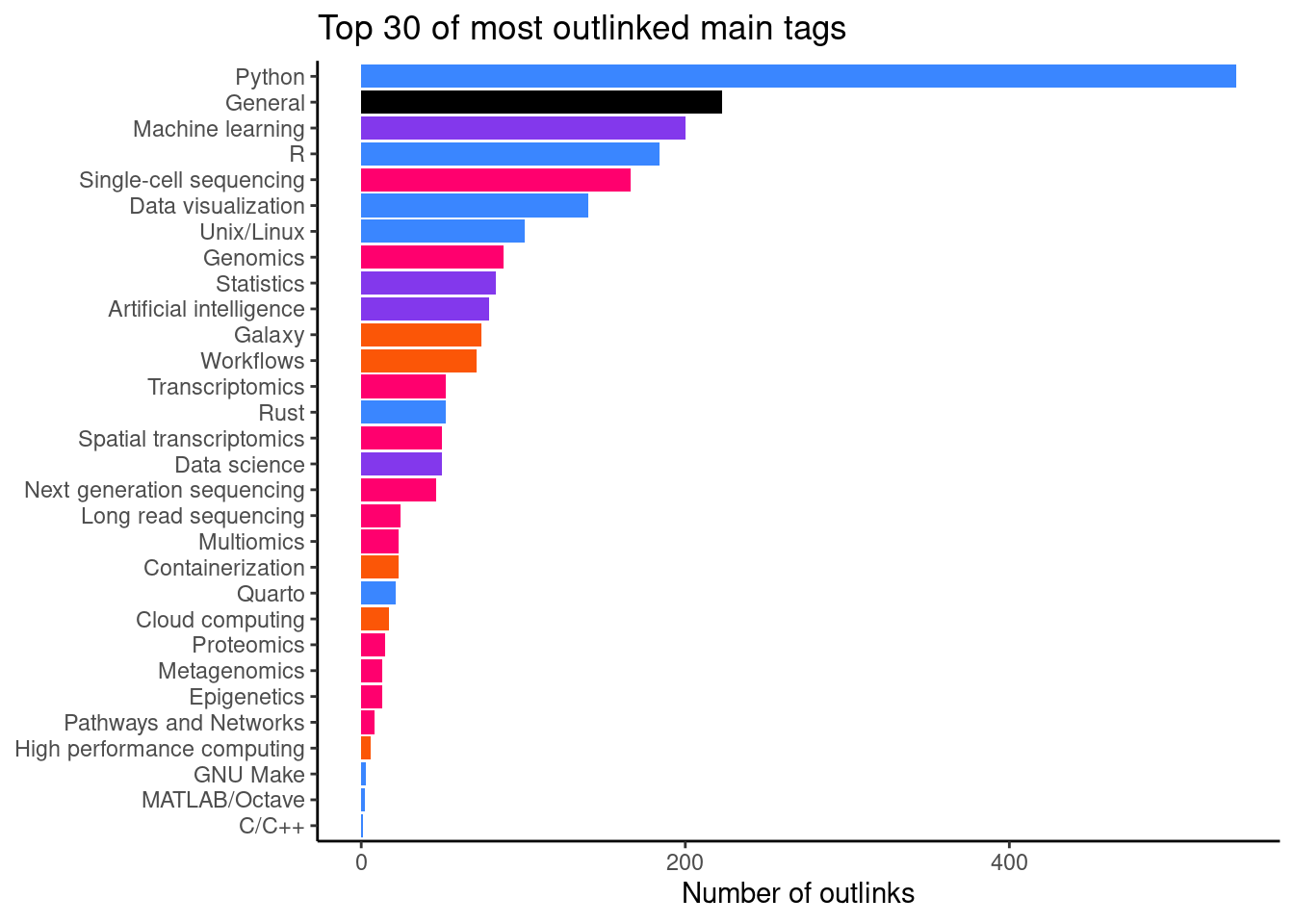

It becomes more interesting when we aggregate the data per main tag (Figure 8). This gives us an idea of which topics people are looking for when visiting glittr.org. The most popular topic is Python with 465 outlinks.

visits_by_main_tag |>

slice(1:30) |>

ggplot(aes(x = reorder(main_tag, total_visits),

y = total_visits, fill = category)) +

geom_bar(stat = "identity") +

coord_flip() +

scale_fill_manual(values = glittr_cols) +

ggtitle("Top 30 of most outlinked main tags") +

ylab("Number of outlinks") +

theme_classic() +

theme(legend.position = "none",

axis.title.y = element_blank())

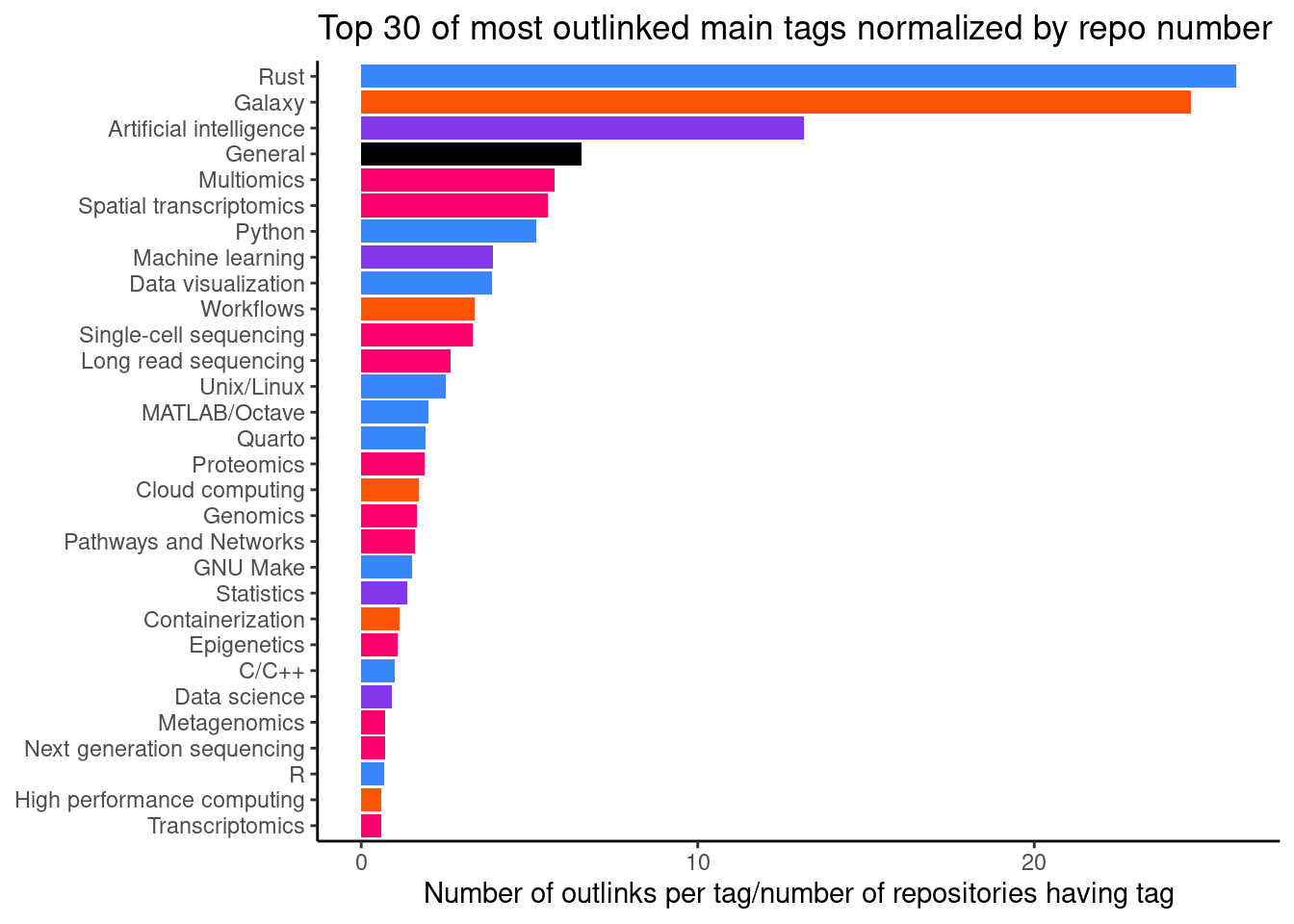

When we normalize the visits by the number of repositories per tag (Figure 9), we get an idea of where training materials might be lacking. A high number here indicates repositories that are quite unique in what they teach but are still popular. Here, we see that there are 4 repositories with the main tag Large language models, with an average of 25.8 outlinks per repository.

visits_by_main_tag |>

arrange(desc(visits_per_repo)) |>

slice(1:30) |>

ggplot(aes(x = reorder(main_tag, visits_per_repo),

y = visits_per_repo, fill = category)) +

geom_bar(stat = "identity") +

coord_flip() +

scale_fill_manual(values = glittr_cols) +

ggtitle("Top 30 of most outlinked main tags normalized by repo number") +

ylab("Number of outlinks per tag/number of repositories having tag") +

theme_classic() +

theme(legend.position = "none",

axis.title.y = element_blank())

| main_tag | total_visits | category | repositories | visits_per_repo |

|---|---|---|---|---|

| Large language models | 103 | Statistics and machine learning | 4 | 25.7500000 |

| Rust | 44 | Scripting and languages | 2 | 22.0000000 |

| Galaxy | 66 | Computational methods and pipelines | 5 | 13.2000000 |

| Protein structure | 19 | Omics analysis | 2 | 9.5000000 |

| General | 303 | Others | 35 | 8.6571429 |

| Python | 465 | Scripting and languages | 115 | 4.0434783 |

| Single-cell sequencing | 222 | Omics analysis | 56 | 3.9642857 |

| Multiomics | 21 | Omics analysis | 6 | 3.5000000 |

| Proteomics | 22 | Omics analysis | 8 | 2.7500000 |

| Spatial transcriptomics | 46 | Omics analysis | 18 | 2.5555556 |

| Unix/Linux | 106 | Scripting and languages | 44 | 2.4090909 |

| Data visualization | 97 | Scripting and languages | 43 | 2.2558140 |

| LaTeX | 2 | Scripting and languages | 1 | 2.0000000 |

| Artificial intelligence | 27 | Statistics and machine learning | 14 | 1.9285714 |

| Metagenomics | 34 | Omics analysis | 18 | 1.8888889 |

| Machine learning | 95 | Statistics and machine learning | 60 | 1.5833333 |

| Transcriptomics | 123 | Omics analysis | 95 | 1.2947368 |

| Workflows | 31 | Computational methods and pipelines | 24 | 1.2916667 |

| Genomics | 65 | Omics analysis | 58 | 1.1206897 |

| GNU Make | 3 | Scripting and languages | 3 | 1.0000000 |

| Epigenetics | 9 | Omics analysis | 10 | 0.9000000 |

| High performance computing | 11 | Computational methods and pipelines | 13 | 0.8461538 |

| Long read sequencing | 8 | Omics analysis | 10 | 0.8000000 |

| Statistics | 54 | Statistics and machine learning | 69 | 0.7826087 |

| Pathways and Networks | 6 | Omics analysis | 8 | 0.7500000 |

| Variant analysis | 25 | Omics analysis | 35 | 0.7142857 |

| Image analysis | 8 | Computational methods and pipelines | 13 | 0.6153846 |

| Next generation sequencing | 42 | Omics analysis | 72 | 0.5833333 |

| Data science | 34 | Statistics and machine learning | 62 | 0.5483871 |

| Java | 1 | Scripting and languages | 2 | 0.5000000 |
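The normalization is a plain ratio of total visits to the number of repositories carrying the tag. A short sketch that recomputes it for three rows copied from the table above shows how the ranking changes: Python has by far the most visits but ranks below the two smaller tags after normalization.

```r
# Three rows copied from the table above
tag_visits <- data.frame(
  main_tag = c("Python", "Large language models", "Rust"),
  total_visits = c(465, 103, 44),
  repositories = c(115, 4, 2)
)

# Visits per repository: total visits divided by the number of repositories
tag_visits$visits_per_repo <- tag_visits$total_visits / tag_visits$repositories

# Highest normalized value first
tag_visits <- tag_visits[order(-tag_visits$visits_per_repo), ]
print(tag_visits)
```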

We can also check popularity by namespace, i.e. author (Table 8).

| author_name | total_visits |

|---|---|

| TheAlgorithms | 227 |

| sib-swiss | 175 |

| hbctraining | 121 |

| mlabonne | 84 |

| theislab | 78 |

| NBISweden | 72 |

| jakevdp | 68 |

| galaxyproject | 66 |

| ISUgenomics | 63 |

| hadley | 57 |

| gladstone-institutes | 54 |

| bobbyiliev | 43 |

| cxli233 | 42 |

| realpython | 42 |

| pachterlab | 41 |

| griffithlab | 36 |

| zhiwehu | 32 |

| DataTalksClub | 29 |

| carpentries-incubator | 28 |

| rust-lang | 28 |

| lexfridman | 27 |

| trekhleb | 27 |

| harvardinformatics | 25 |

| carpentries-lab | 23 |

| Yorko | 22 |

| telatin | 22 |

| NeuromatchAcademy | 21 |

| biotrain-latam | 21 |

| bioinformatics-core-shared-training | 20 |

| cmdcolin | 20 |

Summary plot

Full figure 2 of the manuscript.