Setup & quality control

Presentations

Exercises

First login

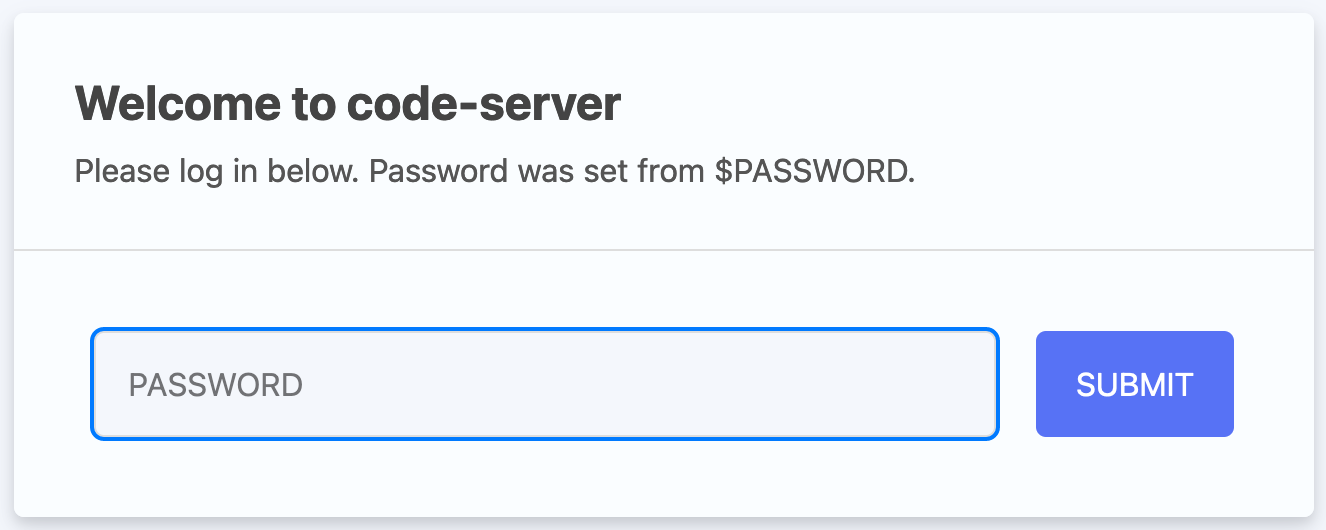

If you are participating in this course with a teacher, you have received a link and a password. Copy-paste the link (including the port, e.g.: http://12.345.678.91:10002) in your browser. This should result in the following page:

The link gives you access to a web version of Visual Studio Code. This is a powerful code editor that you can also use as a local application on your computer.

Type in the password that was provided to you by the teacher. Now let’s open the terminal. You can do that by clicking Application menu > Terminal > New Terminal:

For a.o. efficiency and reproducibility it makes sense to execute your commands from a script. With use of the ‘new file’ button:

Setup

We will start the exercises with pre-aligned bam files. We have already preapred the folders for you with all the data that you need. If you are following the course later on:

You can create a symbolic link to the parent /data directory into your project directory project/, this simplify the path organization

cd ~/project

ln -s /data .This allows you to browse the data using VSCode’s file explorer within your project.

To start, download and extract to the into a folder, and change your paths to your folder:

mkdir -p ~/project

cd ~/project

wget https://cancer-variants-training.s3.eu-central-1.amazonaws.com/course_data.tar.gz

tar -xvzf course_data.tar.gz

mv course_data data

rm course_data.tar.gzThen update your paths, depending on where your data is:

PROJECT=~/project/

ALIGNDIR=~/project/data/alignments

REFDIR=~/project/data/reference

RESOURCEDIR=~/project/data/resources

VEPDBS=~/project/data/VEP_dbsNow, check out the directory ~/project/data and see what’s in there (e.g. with tree):

└── ~/project/data

├── alignments

│ ├── normal.recal.bai

│ ├── normal.recal.bam

│ ├── tumor.recal.bai

│ └── tumor.recal.bam

├── reads

│ ├── normal_R1.fastq.gz

│ ├── normal_R2.fastq.gz

│ ├── tumor_R1.fastq.gz

│ └── tumor_R2.fastq.gz

├── reference

│ ├── exome_regions.bed.interval_list

│ ├── ref_genome.dict

│ ├── ref_genome.fa

│ ├── ref_genome.fa.fai

│ └── ref_genome.fa.gz

├── resources

│ ├── 1000G_phase1.snps.high_confidence.hg38.subset.vcf.gz

│ ├── 1000G_phase1.snps.high_confidence.hg38.subset.vcf.gz.tbi

│ ├── 1000G_phase1.snps.high_confidence.hg38.vcf.gz

│ ├── 1000G_phase1.snps.high_confidence.hg38.vcf.gz.tbi

│ ├── 1000g_pon.hg38.subset.vcf.gz

│ ├── 1000g_pon.hg38.subset.vcf.gz.tbi

│ ├── 1000g_pon.hg38.vcf.gz

│ ├── 1000g_pon.hg38.vcf.gz.tbi

│ ├── af-only-gnomad.hg38.subset.vcf.gz

│ ├── af-only-gnomad.hg38.subset.vcf.gz.tbi

│ ├── af-only-gnomad.hg38.vcf.gz

│ ├── af-only-gnomad.hg38.vcf.gz.tbi

│ ├── Mills_and_1000G_gold_standard.indels.hg38.subset.vcf.gz

│ ├── Mills_and_1000G_gold_standard.indels.hg38.subset.vcf.gz.tbi

│ ├── Mills_and_1000G_gold_standard.indels.hg38.vcf.gz

│ ├── Mills_and_1000G_gold_standard.indels.hg38.vcf.gz.tbi

│ ├── refFlat.txt

│ └── refFlat.txt.gz.1

└── VEP_dbs

├── alphamissense

│ ├── AlphaMissense_hg38.tsv.gz

│ └── AlphaMissense_hg38.tsv.gz.tbi

├── clinvar

│ ├── clinvar.vcf.gz

│ └── clinvar.vcf.gz.tbi

└── revel

├── new_tabbed_revel_grch38.tsv.gz

├── new_tabbed_revel_grch38.tsv.gz.tbi

└── new_tabbed_revel.tsv.gz

9 directories, 38 filesShowing us that we have three directories:

alignments: containing bam files of tumor and normalreference: containing the genome fasta file and target intervalsresources: containing amongst other variant files (vcf) from among other the 1000 genomes project and gnomADVEP_dbs: Clinvar and alphamissense and revel databases for usage with VEP.

The dataset we’re working with is prepared by the developers of the Precision medicine bioinformatics course by the Griffith lab. It is whole exome sequencing (WES) data of cell lines derived from a tumor of triple negative breast cancer (TNBC) (HCC1395) and derived from normal tissue (HCC1395 BL).

You are strongly encouraged to your work with scripts during the course, which you store in the directory scripts. Therefore create a scripts directory:

cd ~/project/

mkdir scripts Quality control of the bam files

First we use the information in the header to get information about the contents of the bam file and how it was generated.

In somatic variant analysis, we’re looking for variants present in tumor but not normal tissue. These are often present at low allele frequencies (especially in heterogeneous tumors or with contamination). Therefore:

- Coverage depth matters: Low-frequency variants (5-10% VAF) need >100x coverage for reliable detection

- Duplicate marking is critical: PCR duplicates can create false variants or inflate allele frequencies

- On-target rates: Poor capture efficiency means lower power to detect somatic mutations in genes of interest

- Contamination: Normal cell contamination in tumor samples dilutes somatic variants

Check out the contents of the headers of the bam files with samtools view -H, and answer the following questions:

- How is the bam file sorted?

- Which chromosomes were used as reference?

- How many read groups are in there, what is the readgroup ID and what is the sample name?

- Which aligner was used?

- If there are duplicates in there, how are they marked?

- Which other programs were run to create the bam file?

To get information on the bam header we run

ALIGNDIR=~/project/data/alignments

samtools view -H "$ALIGNDIR"/normal.recal.bamWhich returns:

@HD VN:1.6 SO:coordinate

@SQ SN:chr6 LN:170805979

@SQ SN:chr17 LN:83257441

@RG ID:HWI-ST466.C1TD1ACXX.normal LB:normal PL:ILLUMINA SM:normal PU:HWI-ST466.C1TD1ACXX

@PG ID:bwa PN:bwa VN:0.7.17-r1188 CL:bwa mem /config/data/reference//ref_genome.fa /config/data/reads/normal_R1.fastq.gz /config/data/reads/normal_R2.fastq.gz

@PG ID:samtools PN:samtools PP:bwa VN:1.21 CL:samtools sort

@PG ID:samtools.1 PN:samtools PP:samtools VN:1.21 CL:samtools view -bh

@PG ID:MarkDuplicates VN:Version:4.5.0.0 CL:MarkDuplicates --INPUT /config/data/alignments/normal.rg.bam --OUTPUT /config/data/alignments/normal.rg.md.bam --METRICS_FILE /config/data/alignments/marked_dup_metrics_normal.txt ... PN:MarkDuplicates

@PG ID:GATK ApplyBQSR VN:4.5.0.0 CL:ApplyBQSR --output /config/project/data/alignments/.recal.bam --bqsr-recal-file /config/project/data/alignments/normal.bqsr.recal.table --input /config/project/data/alignments/normal.rg.md.bam ... PN:GATK ApplyBQSR

@PG ID:samtools.2 PN:samtools PP:samtools.1 VN:1.21 CL:samtools view -H tumor.recal.bam- From the

@HDtag, we can see that the bam file is sorted by coordinate. - We have two lines starting with

@SQ, which gives us information about the reference used. The reference genome used contains chromosomes 6 and 17. - We have one line starting with

@RG. Specifying there is a read group with IDHWI-ST466.C1TD1ACXX.normaland sample name (SM)normal. - In order to know which programs were run to generate this bam file, we can use the

@PGtags. Here, we see that the aligner used wasbwa mem - The duplicates were marked with

MarkDuplicates. - To sort and compress

samtools sortandsamtools viewwere used. For base quality score recalibration (BQSR)GATK ApplyBQSRwas used.

Now we extract information from the alignments themselves. We first have a look at few alignments and then get a summary of the alignments with samtools flagstat.

Check out the first few alignments with samtools view and head.

- What is likely the read length used?

- Are the reads single-end or paired-end?

- Are the first reads tagged with the readgroup ID?

The command:

ALIGNDIR=~/project/data/alignments

samtools view "$ALIGNDIR"/normal.recal.bam | head -3Returns the first three alignments:

HWI-ST466:135068617:C1TD1ACXX:7:1114:9733:82689 163 chr6 60001 60 100M = 60106 205 GATCTTATATAACTGTGAGATTAATCTCAGATAATGACACAAAATATAGTGAAGTTGGTAAGTTATTTAGTAAAGCTCATGAAAATTGTGCCCTCCATTC ;B@EDB@A@A@DDDGBGBFCBC?DBEEGCGCAADAGCECDCDDDBABAGBECEGCBHH@?EGBCBCDDBHBAEEHGDFBBHBDDDBCHBGEFFEGEDBBG MC:Z:100M MD:Z:100 PG:Z:MarkDuplicates RG:Z:HWI-ST466.C1TD1ACXX.normal NM:i:0 AS:i:100 XS:i:0

HWI-ST466:135068617:C1TD1ACXX:7:1303:2021:90688 99 chr6 60001 60 100M = 60104 203 GATCTTATATAACTGTGAGATTAATCTCAGATAATGACACAAAATATAGTGAAGTTGGTAAGTTATTTAGTAAAGCTCATGAAAATTGTGCCCTCCATTC >A@FDB@A?@>DDCE@FAF@?BAC>ECFCGBAADBEADBDCDDD@@A@FAFADD?ABF@@EF?@ABCDAFB?DCGEBEB@EBCDCBCHBFEFFDGFCBBG MC:Z:100M MD:Z:100 PG:Z:MarkDuplicates RG:Z:HWI-ST466.C1TD1ACXX.normal NM:i:0 AS:i:100 XS:i:0

HWI-ST466:135068617:C1TD1ACXX:7:2304:7514:30978 113 chr6 60001 60 2S98M = 61252 1194 TAGATCTTATATAACTGTGAGATTAATCTCAGATAATGACACAAAATATAGTGAAGTTGGTAAGTTATTTAGTAAAGCTCATGAAAATTGTGCCCTCCAT >DHABFEACBBBCBGCECHEGBEACBDHCGCHCBDBBFAEAGBCCB@BAEECGBEEDBED>@EDDABDDADE@CBDFFBFBCFCCBADBDBDFFFAFF?@ MC:Z:60S40M MD:Z:98 PG:Z:MarkDuplicates RG:Z:HWI-ST466.C1TD1ACXX.normal NM:i:0 AS:i:98 XS:i:0- At the CIGAR strings we see

100Mand2S98M, meaning that the original read had 100 base pairs. So it’s likely a read length of 100 bp has been used. - This question might be a bit more challenging. In the 5th column we see an equal sign (

=). This shows that the mate is mapped to same chromosome, so suggesting we are working with paired-end reads. Secondly, at the@PGheader tag, we saw thatbwa memtook two fastq files (normal_R1.fastq.gzandnormal_R2.fastq.gz) as input. Lastly, we can use the sam flags in the second column to figure that out. If you paste the first flag,163, in the explain sam flags website, we can see that the read is paired. - Yes, the read group tag starts with

RG, and is specified for all three alignments.

Create a script called 01_samtools_flagstat.sh and store it in ~/project/scripts. Use samtools flagstat to summarize the alignments in both bam files. How many reads are in the bam files? And how many alignments are marked as duplicate?

ALIGNDIR=~/project/data/alignments

PROJECT=~/project/alignments

mkdir -p $PROJECT

for sample in tumor normal

do

# Run flagstat on the BAM file in /data and save to ~/project

samtools flagstat $ALIGNDIR/"$sample".recal.bam > "$PROJECT"/"$sample".recal.bam.flagstat

donereturns for normal.recal.bam:

12744793 + 0 in total (QC-passed reads + QC-failed reads)

12733034 + 0 primary

0 + 0 secondary

11759 + 0 supplementary

1397598 + 0 duplicates

1397598 + 0 primary duplicates

12671529 + 0 mapped (99.43% : N/A)

12659770 + 0 primary mapped (99.42% : N/A)

12733034 + 0 paired in sequencing

6366517 + 0 read1

6366517 + 0 read2

12515974 + 0 properly paired (98.30% : N/A)

12586532 + 0 with itself and mate mapped

73238 + 0 singletons (0.58% : N/A)

20414 + 0 with mate mapped to a different chr

13194 + 0 with mate mapped to a different chr (mapQ>=5)Showing us that we have 12,744,793 reads, and all those were paired. Of the alignments, 1,397,598 were marked as duplicate. If we do the same for the tumor bam file we see that it has 16,674,562 reads and 1,871,521 alignment marked as duplicate.

So, all looks good until now. We have many reads and high alignment rates. The bam files seem to be ready for variant analysis, because they have read groups, duplicates are marked and they are sorted by coordinate. However, since we are working with whole exome sequencing (WES) data, we would like to know whether the reads align to the target regions and what kind of coverage we have. For this, we use gatk CollectHsMetrics.

Create a script called 02_gatk_collecthsmetrics.sh in ~/project/scripts to run gatk CollectHsMetrics on the two bam files. Here’s an example:

PROJECT=~/project/alignments

ALIGNDIR=~/project/data/alignments

REFDIR=~/project/data/reference

mkdir -p $PROJECT

for sample in tumor normal

do

gatk CollectHsMetrics \

-I "$ALIGNDIR"/"$sample".recal.bam \

-O "$PROJECT"/"$sample".recal.bam_hs_metrics.txt \

-R "$REFDIR"/ref_genome.fa \

--BAIT_INTERVALS "$REFDIR"/exome_regions.bed.interval_list \

--TARGET_INTERVALS "$REFDIR"/exome_regions.bed.interval_list

done The produced reports are not so easy to read. To parse the metrics file, we run multiqc on the directory:

MULTIQC=~/project/multiqc

PROJECT=~/project/alignments

mkdir -p $MULTIQC

multiqc $PROJECT -o $MULTIQCDownload the multiqc report (multiqc_report.html) by right-clicking on the file and select ‘Download’. Check out the hybrid-selection metrics or by clicking here: multiqc report . How did the hybrid capture go? Are most bases on-target? What kind of coverages can we expect in the target regions?

You can find the documentation on the different metrics here. Note that the descriptions of the metrics are not always correct. The metrics with ON_BAIT contain information including duplicates, while ON_TARGET without duplicates. This has been an issue since 2020, and hasn’t been worked on unfortunately.

We see that we have a fold enrichment of around 22 and about 45% usable bases on-target. About 90% were ‘selected bases’, which means bases aligning within 250 bp of the target region. We’re looking at a de-duplicated coverage of 84x for the normal sample and 114.3x for the tumor sample. So, on average, we seem to have acceptable coverages for both samples.

Notice that tumor coverage (114x) is higher than normal (84x). This is intentional in cancer studies because:

- Tumor samples may have subclonal populations with low-frequency variants

- Tumor purity varies: A deeper coverage is needed to detect variants in impure samples

- Generally aim for 2-3x higher coverage in tumor than matched normal